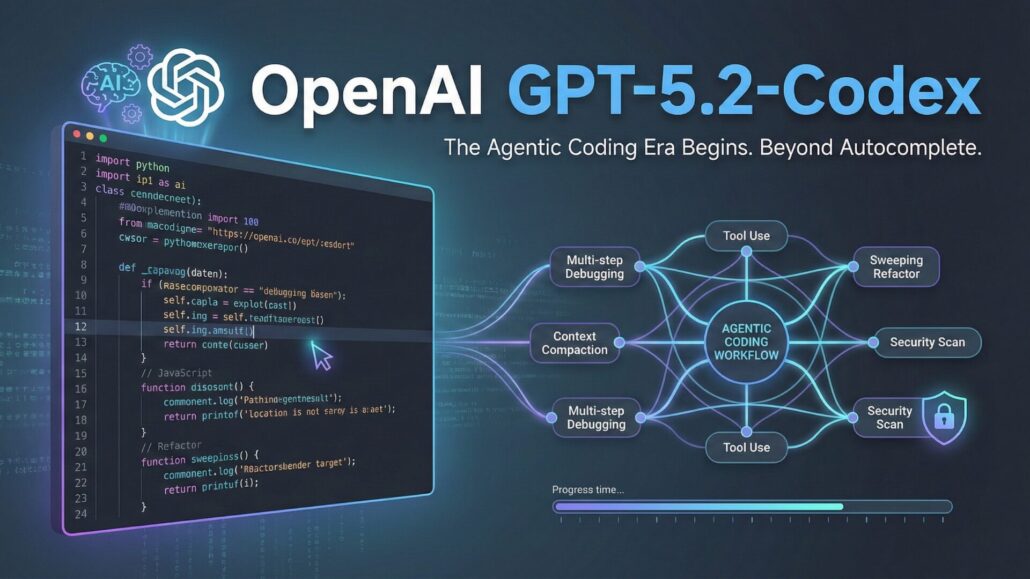

GPT-5.2-Codex Adds More Agentic Power And More Cybersecurity Guardrails

The new model isn’t just writing snippets anymore. It’s trying to handle the messy reality of software engineering.

OpenAI just pulled the curtain back on GPT-5.2-Codex, a specialized variant of its flagship model designed to move past “autocomplete” and into the era of the autonomous coding agent. While previous models were great at churning out a single Python function or a clever CSS trick, OpenAI is pitching GPT-5.2-Codex as a model built for the long haul: multi-step debugging, massive refactors, and the kind of sprawling migrations that usually give developers a headache.

If you’re a paid ChatGPT user, you can play with it inside Codex right now. API access is slated for “the coming weeks,” and the company is still leaning heavily into the Codex CLI for those who want an AI agent living directly in their terminal.

Beyond the snippet

The industry has been buzzing about “agentic” AI for months, but GPT-5.2-Codex represents OpenAI’s most concerted effort to make that a reality. In the world of AI, “agentic” means the model doesn’t just respond to a prompt and stop; it plans, uses tools, and iterates until the job is done.
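For readers who want to see what that loop looks like in practice, here is a minimal Python sketch of the generic plan, act, observe pattern. It is not OpenAI's implementation. The `scripted_decide` function is a hypothetical stand-in for the model, and the `run_tests`/`apply_patch` tools and the fake repository they operate on are made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Step:
    tool: str      # tool the "model" wants to call next, or "done" to stop
    arg: str = ""

@dataclass
class AgentState:
    goal: str
    history: list[str] = field(default_factory=list)  # observations so far

def run_agent(goal: str,
              decide: Callable[[AgentState], Step],
              tools: dict[str, Callable[[str], str]],
              max_steps: int = 10) -> AgentState:
    """Plan -> act -> observe, repeated until the policy says it is done."""
    state = AgentState(goal)
    for _ in range(max_steps):
        step = decide(state)                  # "plan": choose the next action
        if step.tool == "done":
            return state
        result = tools[step.tool](step.arg)   # "act": call a tool
        state.history.append(f"{step.tool}({step.arg}) -> {result}")  # "observe"
    raise RuntimeError("step budget exhausted before the goal was reached")

# --- Hypothetical tools over a fake repository (illustration only) ---
def make_fake_repo() -> dict[str, Callable[[str], str]]:
    repo = {"bug_fixed": False}

    def run_tests(_: str) -> str:
        return "PASS" if repo["bug_fixed"] else "FAIL: test_parse"

    def apply_patch(path: str) -> str:
        repo["bug_fixed"] = True
        return f"patched {path}"

    return {"run_tests": run_tests, "apply_patch": apply_patch}

def scripted_decide(state: AgentState) -> Step:
    # Stand-in for the model: reads the last observation, picks the next tool.
    if not state.history:
        return Step("run_tests")
    last = state.history[-1]
    if "FAIL" in last:
        return Step("apply_patch", "parser.py")
    if last.startswith("apply_patch"):
        return Step("run_tests")
    return Step("done")

if __name__ == "__main__":
    final = run_agent("make the test suite pass", scripted_decide, make_fake_repo())
    for line in final.history:
        print(line)
```

Running it prints a failing test run, the patch, and a passing test run. The `max_steps` cap matters because a real agent can loop indefinitely; much of the "long-horizon" pitch is about keeping exactly this loop on track.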

OpenAI says the new model targets some of the most common ways today's AI agents fail. We've all seen an AI lose the plot halfway through a long conversation. To address this, GPT-5.2-Codex leans on “context compaction” and improved long-session coherence. Essentially, the model is better at remembering the original goal even after it has spent an hour digging through your messy codebase.
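This is not OpenAI's implementation, but a generic Python illustration of the compaction idea: keep the original goal pinned, and when the running transcript grows past a budget, fold older turns into a summary while keeping the newest ones verbatim. The word-count `count_tokens`, the placeholder `summarize`, and the `Transcript` class with its `budget` and `keep_recent` settings are all hypothetical.

```python
from dataclasses import dataclass, field

def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: count whitespace-separated words.
    return len(text.split())

def summarize(turns: list[str]) -> str:
    # Placeholder: a real agent would ask the model to write this summary.
    return f"[summary of {len(turns)} earlier entries]"

@dataclass
class Transcript:
    goal: str                                   # pinned: never compacted away
    turns: list[str] = field(default_factory=list)
    budget: int = 50                            # hypothetical token budget
    keep_recent: int = 2                        # newest turns kept verbatim

    def size(self) -> int:
        return count_tokens(self.goal) + sum(count_tokens(t) for t in self.turns)

    def add(self, turn: str) -> None:
        self.turns.append(turn)
        # Over budget: fold everything except the newest turns (including any
        # earlier summary) into a single summary entry. The result can still
        # exceed the budget if the recent turns alone are large.
        if self.size() > self.budget and len(self.turns) > self.keep_recent:
            older = self.turns[:-self.keep_recent]
            self.turns = [summarize(older)] + self.turns[-self.keep_recent:]

    def prompt(self) -> str:
        # What would be sent to the model next: goal first, then compacted history.
        return "\n".join([f"GOAL: {self.goal}"] + self.turns)

if __name__ == "__main__":
    t = Transcript(goal="migrate the billing service to Python 3.12", budget=30)
    for i in range(4):
        t.add(f"step {i}: " + "x " * 8)
    print(t.prompt())
```

Pinning the goal outside the compactable history is the design choice that matters here: no matter how many turns get summarized, the next prompt still starts with what the agent was asked to do.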

There are also some surprising “quality of life” upgrades here. OpenAI explicitly highlighted improved Windows-native agentic coding and better vision capabilities. The vision upgrade means the agent can more reliably “see” things like error dialogs or UI glitches that don’t always show up in a standard text log, letting it handle more of the visual friction of daily dev work.

Benchmarking the “gritty stuff”

Benchmarks are often the weakest part of an AI reveal, usually focusing on sterile, LeetCode-style problems. OpenAI is trying to change that narrative by pointing to two specific metrics:

- SWE-Bench Pro: A hardened version of the software engineering benchmark that tests whether a model can actually resolve real-world issues in existing repositories.

- Terminal-Bench 2.0: This focuses on the “gritty” work: configuring environments, running builds, and debugging in a live terminal.

OpenAI claims GPT-5.2-Codex excels here because it isn’t just guessing the next line of code; it’s reasoning through the execution.

The cybersecurity tightrope

Perhaps the most interesting (and most controversial) angle is OpenAI’s focus on cybersecurity. The company is positioning GPT-5.2-Codex as a powerful tool for defensive security, capable of spotting vulnerabilities and analyzing exploits better than any of its predecessors.

But OpenAI isn’t giving everyone unrestricted access to these capabilities. It’s launching an invite-only “trusted access” pilot for vetted security professionals. That’s a clear admission that a model better at finding bugs to fix them is, by definition, also better at finding bugs to exploit them. Under OpenAI’s own Preparedness Framework, the model doesn’t yet reach the “High” cyber capability threshold, but the guardrails are clearly tightening as capability climbs.

Tony’s Take: Reliability over Hype

The most important upgrades in GPT-5.2-Codex aren’t the ones that make for a flashy TikTok demo. They’re the unsexy ones.

If this model can actually handle tool calling without “gaslighting” the developer (hallucinating API outputs or forgetting its own previous steps), it moves from toy to tool. These models are starting to feel less like a search engine and more like a junior teammate.

The real test, however, starts when that API opens up. Once developers start plugging GPT-5.2-Codex into their own CI/CD pipelines and custom agents, we’ll see if those “long-horizon” stability claims actually hold up on a Tuesday morning when the production server is screaming.

What to Watch

- API Rollout: Expect a flood of new “AI Engineer” startups the moment the API hits.

- The “Trusted Access” Vetting: It remains to be seen how transparent OpenAI will be about who gets the “keys to the kingdom” for security work.

- Windows Workflows: Seeing a specific callout for Windows is rare in the AI space; it suggests OpenAI is looking to capture the enterprise dev market where Windows still reigns supreme.
